How to Think About Ranking on Google Search in the Future

2024 note: The original title of this post was “How to think about ranking on Google Search in 2021”. I have not changed any of the substance since then; I only removed some corny gifs and tweaked a few headings. Today I updated the featured image. In the coming months, I will be adding new sections to address Generative AI and SGE. – Mike

Table of Contents

- Same old things, just a different year

- Google algorithm updates: The typical cycle

- Search is not a science

- Choosing the right SEO strategy

- SERPs are going to start responding to real-world events

- Not all rankings factors matter all the time

- All websites are different

Same Old Things, Just a Different Year

Every year I see new articles about rankings factors, and how to optimize for them in the current year.

During my first couple of years as an SEO specialist I got excited about them because I was eager to learn. Now, they’re starting to sound like a broken record.

The main difference is that the year in the title gets updated; most of the strategies and information are things we already know. Just now I opened a new tab, typed “SEO rankings factors…”, and autocomplete added “2022”. This means that the search engine (Google, in this case) has identified that people expect to learn about new rankings factors every year.

I think this is largely a result of Google’s development and release cycles. Each year they (publicly) release a couple of small updates and then one big one. SEOs then freak out and want to know how they should respond to it.

Are there any new rankings factors?

How strong are they?

Are there new strategies we can pitch our clients?

Google Algorithm Updates: The Typical Cycle

Over the past 5 years I’ve seen breaking news of Google algorithm updates more times than I can count. Every time, it goes something like this:

- SEMRush, Moz, and others record large spikes in SERP volatility (a metric ranging 1-9 calculated by what’s essentially a search results seismograph).

- A large group of SEOs/webmasters see huge drops in their rankings over a 1-2 day period. They rush to Twitter and tweet at Gary Illyes and John Mueller about possible algorithm updates, and Gary and John get super annoyed fast.

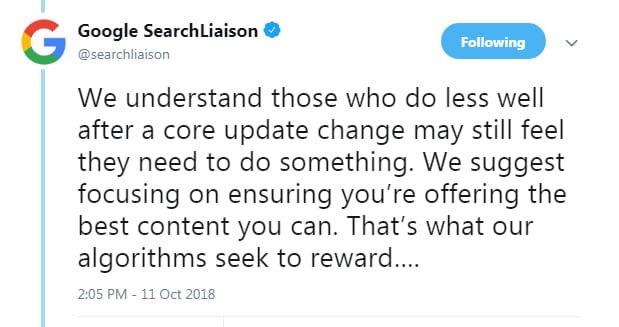

- Google’s search team addresses these concerns with the same boilerplate algorithm update advice:

Over the next month, as more information about the confirmed or unconfirmed update comes out, influencers and popular SEO sites start rolling out articles about everything you need to know about the update, and they (subjectively) tell you how to respond to it.

This is not me denouncing anyone for capitalizing on the news. I do think we need this stuff.

By “subjectively”, I do not mean to imply that they’re wrong. These people are smart and you should listen to them, but always stay skeptical. Intuition and gut feelings are two very powerful SEO tools.

When I was just starting out, I had Google Alerts set up for all kinds of stuff like that. I learned a ton from industry names like Rand Fishkin and Neil Patel (who’s in the above results), and like 90% of the stuff I read years ago is still relevant in 2020. Which brings me to my point:

Not a whole lot ever really changes. I mean… in recent memory there have been things like the shift to mobile-first indexing and JSON-LD becoming Google’s recommended markup format for structured data. But did either of those things fundamentally change the way we do SEO?

Not really, right?

We made sure our sites were responsive (and not super slow) and rewrote our structured data. The reality is that Google changes all the time, and we don’t even notice most of their updates. Google’s “boilerplate” response to concerns about algorithm updates is the same every time for a reason!

We suggest ensuring that you’re offering the best content you can.

– Google, every algorithm update

Search is not a science

All the links I mention throughout the rest of this post are collected at the very end. For all of this to make sense, I recommend reading the full article… don’t just skim.

When there are significant changes being made to the way they rank pages, Google lets us know about it.

HTTPS was one of these a while back, and page experience (which includes site speed) has been a big one in recent years. Page experience is something they’ve been iterating on (Core Web Vitals), and they just updated us about it in December.

The difficulty with site speed as a rankings factor has always been measurement, which makes sense. Initially, overall speed was measured with a metric called Speed Index. Moving forward, they’ll be using Core Web Vitals to indicate speed. I’ll drop a link at the bottom of this post.
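To make the “measurement” problem a bit more concrete, here is a minimal Python sketch of how a page’s Core Web Vitals could be bucketed. It uses Google’s published “good” and “poor” thresholds for each metric and evaluates the 75th percentile of field measurements, which is the percentile Google recommends assessing. Assumptions: the sample numbers are made up, the nearest-rank percentile is a simplification (real field-data tools may compute it differently), and INP is included because it replaced FID as a Core Web Vital in March 2024. It illustrates the metrics only, not how Google weighs them in rankings.

```python
import math
from typing import Dict, List, Tuple

# Published boundaries per metric: (good_max, needs_improvement_max).
# LCP, FID and INP are in milliseconds; CLS is unitless.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "LCP": (2500, 4000),
    "FID": (100, 300),
    "INP": (200, 500),  # INP replaced FID as a Core Web Vital in March 2024
    "CLS": (0.1, 0.25),
}


def p75(samples: List[float]) -> float:
    """75th percentile via the simple nearest-rank method (an assumption;
    real field-data tools may interpolate differently)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


def classify(metric: str, value: float) -> str:
    """Bucket one metric value using the thresholds above."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def assess(field_data: Dict[str, List[float]]) -> Dict[str, str]:
    """Classify each metric by its 75th-percentile value."""
    return {metric: classify(metric, p75(values)) for metric, values in field_data.items()}


if __name__ == "__main__":
    # Hypothetical field measurements for a single page.
    page = {
        "LCP": [1800, 2100, 2400, 2600, 3900],
        "CLS": [0.02, 0.05, 0.08, 0.30],
        "INP": [120, 150, 180, 650],
    }
    print(assess(page))  # {'LCP': 'needs improvement', 'CLS': 'good', 'INP': 'good'}
```

Notice that one slow outlier (650 ms INP) doesn’t sink the page, because the assessment looks at the 75th percentile rather than the worst visit.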

Ultimately, which page should rank first, second, and so forth is simply subjective. So is search intent; Google can’t ever truly know what a user’s actual intent was behind their query.

Which page should be ranking for which query?

Google doesn’t just go “ah this page is obviously the best page, clearly it should be #1”. They rely on tons of signals to give them a best guess.

[Image: Larry Page and Sergey Brin back in the day, probably around the time Google blew up.]

Some of these are likely the traditional “rankings” signals, but Google has gotten immensely smarter since Larry Page and Sergey Brin invented PageRank. They have tons of signals! Everything is a signal!

Even signals that aren’t ranking signals in the literal sense probably feed into (at least a little bit) the big-shot signals that help Google make a final decision.

We also know that at least some degree of human judgment goes into it.

Maybe not for every query and search result, but probably for the super-high-volume stuff, perhaps in cases where the first-page candidates all have similar “signals” and the algorithms call it a toss-up.

We know this because Google does have people called Search Quality Evaluators, and their guidelines are publicly available. (Google says their ratings don’t directly change how individual pages rank; they’re used to evaluate whether the algorithms are doing a good job.) I’ll also drop a link to the guidelines later.

So if there are human arbiters judging web pages, then in my mind you have to think about things like design, UX, IA, and helpful tools (like price calculators and product comparison tables) as indirect “rankings factors”.

SEOs need a mindset shift. Before I dive into what exactly that shift looks like, let’s understand what the current mindset is.

In 2020, I posted this on LinkedIn:

“We should do things that are good because they’re good, regardless of whether they’re rankings factors!” That was my central argument.

Below is one of the replies I got that perfectly articulates the current mindset of a majority of SEOs.

Kelvin explains it perfectly! Clients want guaranteed results! There’s a paradox here, though: SEO is not a quick thing. It takes a lot of time and effort, and plenty of nurturing.

If we want a quick ROI turnaround from search, we have PPC for that. The overall counterargument is that since SEOs need to guarantee success for their clients, they should optimize for the rankings factors we know exist.

I don’t disagree with this; we should always do what we know works. But what happens if, after you check every box, your client’s rankings still suck?

Choosing the right SEO strategy

You can’t take a “one size fits all” approach to SEO. Let’s say you have 15 clients, and to optimize each of their sites you go down the same checklist. The results are going to be different for each site. Rankings may go up for some, but not others. It all depends on the site.

The biggest problem I have with the way we think about rankings factors is that many people treat them like checklists. Our industry has tons of checklists and definitive lists. In my view, they encourage bad habits, and I worry about how this will affect new SEOs, but that’s a whole other discussion.

No two websites are the same

We should not be thinking about rankings factors as boxes to check. Instead, we should think of them as tools that we pull out on an individual basis when a situation warrants them.

Here’s John Mueller describing how Google’s algorithms work (at a high level) on a recent episode of Search Off the Record. SOTR is a new podcast that the Search Relations team at Google is trying out. I’ve watched every episode so far and love what they’re doing.

Google takes the query that a user has and they try to understand it by splitting it up into many smaller parts, which are like signals.

These signals they get about what the user is looking for go through a massive network where there’s different knots along the way that kind of let some individual parts pass, or they kind of reroute them a little bit.

In the end they come up with a simple ranking for the different web pages that will show for that kind of a query. With this kind of a network there are lots of different paths that could lead to the same place.

So what is John saying here? For one, he’s reiterating that Google doesn’t have just one rankings algorithm but many, all part of a larger neural network.

To me this means a couple of things, which we’ll explore in the next section.

SERPs are going to start responding to real-world events

Priority #1: Showing you what you’re looking for, not what others want you to find

Consider the following scenario:

Imagine you’re planning a vacation and you search for “relaxing beach weekend getaways”. Let’s say that you click on an article titled “10 best beach getaways” from the site Mike’s Vacations. In the article, you find that many of the destinations are around the Gulf of Mexico.

A week later, breaking news reports that a massive hurricane is headed for the Gulf but you don’t hear about this news because you’re busy or something.

The next day you dive back into your vacation research, making the same query as before. This time you can’t find the result from Mike’s Vacations anywhere on the first page, so you click on a different result: “13 stunning beach getaways in the Mediterranean” from Trip Advisor.

While waiting for the page to load you flick on the TV and see the headline. “Storm Update: Hurricane Headed for the Gulf Coast Reaches Category 3.”

Thanks to Google, the article you’re about to read highlights relaxing beach getaways that will not be affected by the hurricane. This saved you time, energy, and the money you might have spent on a worthless plane ticket.

What happened? Google’s algorithms responded to real-world events and adjusted the search results accordingly.

Meanwhile, the SEO lead at Mike’s Vacations spent his weekend on Twitter stirring up chatter about a possible unconfirmed Google algorithm update. His beach vacation articles are nowhere to be found!

Danny Sullivan eventually issues a response with guidance:


This has been their guidance since…like forever, and it aggravates many in the SEO community because of how vague and condescending it sounds.

It really isn’t, though. The internet is massive, and content means something different for everyone. It’s not realistic or scalable for Google to give out specific instructions.

Instead, they say to use common sense and do things that are good for your users. It’s our responsibility as SEOs to understand what good means.

A search engine’s job is to show the most relevant and useful information to the users that are searching for something.

You do not need to know how Google’s algorithms work in order to realize that the majority of places featured on your “Top 10 relaxing beach getaways” list are in the Gulf of Mexico, and since it’s about to be hit by a hurricane, you should probably update the list with alternative places that your users can safely visit.

Not all rankings factors matter all of the time

The formula for ranking signals depends on the SERP and type of query

Google ranks pages based on a lot of factors, but depending on the query, the weight of those factors and the specific combination needed to deem a site “worthy” can vary. In effect, Google is asking: what are the most important attributes for a website in this category to have?

So for a news site, perhaps the number of links to its articles and the number of Twitter followers it has are two good indications of its active readership.

For a local plumber, maybe positive reviews, the relative ease of scheduling services, and the amount of troubleshooting resources they make available are the most important factors. Since most plumbing companies are small local outfits that don’t naturally create a lot of viral content, maybe the number of links a plumber has doesn’t matter all that much.

For the search “lawyers in New York”, if Google believes 90% of the ranking sites have illegitimate backlink profiles made up of links from PBNs (private blog networks) and guest posts, who knows? They might just straight-up troll them all and rank the site with the fewest links, because that site is more likely to be honest.
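The news-site and plumber examples can be made concrete with a toy Python sketch: the same two pages, scored as a weighted sum of signals, with the weight set swapped depending on the type of query. Everything here is hypothetical. The signal names, the 0-1 scores, and the per-category weights are invented for illustration and are not Google’s; a weighted sum is just the simplest model that shows the idea.

```python
from typing import Dict, List

Signals = Dict[str, float]

# Hypothetical weights per query type (each set sums to 1.0).
# Invented for illustration; Google does not publish weights like these.
WEIGHTS: Dict[str, Signals] = {
    "news": {"links": 0.5, "social_followers": 0.3, "reviews": 0.0, "helpful_resources": 0.2},
    "local_service": {"links": 0.1, "social_followers": 0.0, "reviews": 0.5, "helpful_resources": 0.4},
}


def score(page: Signals, query_type: str) -> float:
    """Weighted sum of a page's 0-1 signal scores under one query type's weights."""
    weights = WEIGHTS[query_type]
    # A signal the page doesn't have counts as 0.
    return sum(w * page.get(signal, 0.0) for signal, w in weights.items())


def rank(pages: Dict[str, Signals], query_type: str) -> List[str]:
    """Page names ordered from highest to lowest score for this query type."""
    return sorted(pages, key=lambda name: score(pages[name], query_type), reverse=True)


# Two hypothetical pages with very different strengths.
pages: Dict[str, Signals] = {
    "link_magnet": {"links": 0.9, "social_followers": 0.8, "reviews": 0.2, "helpful_resources": 0.3},
    "local_plumber": {"links": 0.2, "social_followers": 0.1, "reviews": 0.9, "helpful_resources": 0.8},
}

if __name__ == "__main__":
    print(rank(pages, "news"))           # ['link_magnet', 'local_plumber']
    print(rank(pages, "local_service"))  # ['local_plumber', 'link_magnet']
```

Neither page changes between the two runs; only the weights do. Under these made-up numbers the link-heavy page scores 0.75 vs. 0.29 for the news-style query, and 0.31 vs. 0.79 for the local-service query.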

Not every website needs to do the same thing to improve their rankings

Here’s another John Mueller quote, this time from his closing monologue featured in the same episode of Search Off the Record I cited before.

“You don’t have to just blindly follow just one ranking factor to get to the end result. It’s also not the case that any particular factor within this big network is the one deciding factor, or that you can say that one factor plays a 10% role, because maybe for some sites and some queries it does not play a role, and maybe for other sites and other queries it is the deciding factor. It’s really hard to say kind of how to keep those together.”

Google changes over time to improve their search results. We’ve all heard this a hundred times.

More specifically, “improving their search results” means optimizing how they understand certain types of queries and the formulas used to build different types of search results. This is not a one-and-done kind of thing; Google is always testing.

Therefore, the best way to keep a website’s rankings stable (which we know is never guaranteed) is to keep working on a wide variety of things so that you’re consistently hitting many different factors.

Your goal should be to make sure that your portfolio of rankings signals is as diverse as possible.
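Here’s a toy Python sketch of why a diverse “portfolio” is more stable. Again, everything is hypothetical: three imaginary algorithm versions that each lean on a different signal, one site that is great at only one thing, and one site that is solid at everything. Both sites average the same score across the three versions, but the concentrated site swings much more when the weights shift.

```python
from statistics import mean, pstdev
from typing import Dict, List

Site = Dict[str, float]

# Hypothetical algorithm versions; each leans on a different signal.
# These weights are invented for illustration only.
ALGORITHM_VERSIONS: List[Dict[str, float]] = [
    {"links": 0.6, "content": 0.2, "ux": 0.2},
    {"links": 0.2, "content": 0.6, "ux": 0.2},
    {"links": 0.2, "content": 0.2, "ux": 0.6},
]


def score(site: Site, weights: Dict[str, float]) -> float:
    """Weighted sum of a site's (hypothetical) 0-1 signal scores."""
    return sum(w * site[signal] for signal, w in weights.items())


def score_history(site: Site) -> List[float]:
    """The site's score under each algorithm version, in order."""
    return [score(site, weights) for weights in ALGORITHM_VERSIONS]


# One site is great at links and weak elsewhere; the other is solid everywhere.
concentrated: Site = {"links": 1.0, "content": 0.3, "ux": 0.2}
diversified: Site = {"links": 0.5, "content": 0.5, "ux": 0.5}

if __name__ == "__main__":
    for name, site in [("concentrated", concentrated), ("diversified", diversified)]:
        history = score_history(site)
        print(name, [round(s, 2) for s in history],
              "mean", round(mean(history), 2),
              "spread", round(pstdev(history), 3))
```

Under these invented weights, the concentrated site scores 0.70, 0.42, and 0.38 across the three versions (mean 0.5), while the diversified site scores 0.5 every time.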

A professional athlete, for example, does a variety of different exercises every time they work out to stay in shape. If you only curl and bench press each time you lift weights, at some point your body will start to look lopsided because you failed to pay attention to your legs.

In the workplace you want diversity on teams so that you get different viewpoints and ultimately make better decisions.

You want the same sort of thing on a good website, so that regardless of how Google’s algorithms change, they can still understand that your website is relevant for a given query in many different ways.

Ultimately, it’s really hard to say that any particular element is this important and has this effect on the search results.

It’s also not very feasible to reverse engineer search results and definitively say “well based on these search results, I can tell that these rankings factors must be the most important because all of the top sites on the page have these qualities”.

Sometimes you can sort of see a trend when it comes to popular domain metrics like Trust Flow, Link Profile Strength, Alexa Rank, etc. But you can’t look at them and deduce an ordered list of action items to check off.

Don’t just focus on one particular thing and then try to make it look natural. You should make sure everything about your site seems like it is natural.

You know which thing I’m talking about?

It should be natural. That is what users expect when they click on organic results.

For example, say a site’s backlink profile hasn’t grown whatsoever for three years, and then all of a sudden it starts getting two links per month in blog content from other sites. What does that look like?

If your goal is to build links, your strategy should revolve around making better and more useful content than any other site in your space. At the same time, you should be going pretty hard on social to spread your great new content. Send it to your mom, your sister, and your best friends. Ask them to pass it along to anyone they know who might find it useful. I’m dead serious.

The end of our conversation, and the start of yours

We can’t limit our SEO to activities that correspond to known “rankings” factors.

Why?

Refer back to our “relaxing beach getaways” example. Google is currently doing that: dynamically adjusting search results based on real-world phenomena. Are we now going to consider “revising old content to be relevant for the present moment” a rankings factor?

No! Well, at least in my opinion we shouldn’t.

That would be sort of ridiculous; we’ve been doing this forever. It’s called “evergreen” content, and it’s a staple of basic content strategy. We’ve been doing it because it’s a good idea and just makes sense!

The links I promised earlier

Core Web Vitals (LCP, FID, CLS)